AI for Good: Progress Towards the Sustainable Development Goals

From healthcare to manufacturing to how we get the food we eat, artificial intelligence (AI) is changing our world, and fast. AI has been around since the 1950s, but the explosion of data from connected devices and recent improvements in processing power have led to AI-driven innovations in every sector. More than half of the 340,000 AI-related patents have been proposed in the past five years.

AI (and related exponential technology) is the most important change force on earth. As we noted in our report Ask About AI, it will do more to impact the lives and livelihoods of school-aged children than any other force. Our work, play and commerce are quickly becoming augmented by AI. Many tasks are being automated, creating both opportunity and displacement.

AI is also improving educational opportunities and access for learners worldwide. Built into adaptive technology and tools, AI is alive in many classrooms already—it is supporting scheduling, transportation, nutrition and funding decisions. AI is influencing talent and hiring decisions, tutoring programs and mentoring of young learners. As educators, we need to be aware of how AI is already being used and of the questions to ask when it is being deployed.

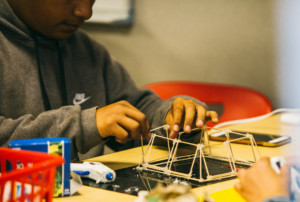

Most exciting is the potential to use AI to create extraordinary benefit—curing disease, advancing clean energy, promoting sustainable transportation and enabling smart cities. And it’s not just the work of computer scientists—schools and communities worldwide are beginning to engage young people in tackling local challenges using smart tools. The movement is called #AIforGood.

AI for Good Global Summit

At the third annual AI for Good Global Summit, leaders and innovators from around the world met to discuss the rapid change and progress AI is bringing to these sectors (including education) and how to harness AI for positive progress.

Houlin Zhao, Secretary-General of ITU, shared that the Summit is “… the place where AI innovators connect to identify practical applications of progress towards the UN Sustainable Development Goals (SDGs).”

ITU, along with XPrize, Microsoft AI and the Association for Computing Machinery (all primary sponsors and organizers of the event), demonstrated their commitment not only to identifying pathways to progress, but also to addressing the ethical issues, government policies and requirements we might consider for companies, developers and users of AI tools.

James Kwasi Thompson, Minister of State for Grand Bahama, repeatedly stressed that good use of AI will always come down to keeping people at the center of our decisions and to how the technology is used for good. He shared, “I always ask myself how will AI solve the problem facing a group of people in my country? With fewer and fewer resources in some communities, it actually means a greater need for creativity and for innovation. AI should not replace workers, but it should enhance those workers and their contributions, especially in developing countries.”

AI could massively exacerbate the inequities we see in the world if we don’t keep people at the center. The conference was divided into breakthrough tracks so attendees could dive deep, co-create potential project ideas to solve AI challenges and address these inequities.

Breakthrough Tracks:

- AI Education: Reaching and Engaging 21st Century Learners

- Good Health and Well-Being

- AI, Human Dignity & Inclusive Societies

- Scaling AI for Good

- AI for Space

Predominant Themes

Four predominant themes emerged throughout the keynotes and these breakthrough tracks.

Design for Inclusivity and Fighting Bias. There is a continued need for a multi-angled approach to developing solutions that eliminate growing biases, celebrate diversity and increase inclusivity. These continue to be non-negotiables in the design of AI, especially if we are working towards doing good while embracing the SDGs.

Timnit Gebru (@timnitGebru) of Google and Black in AI pushed us to always ask the questions:

- Should we do this? Should we use AI in this instance?

- Are the tools robust enough to do not just a good job, but a just job?

Gebru knows as a computer scientist that biases, stereotypes and assumptions about who we are and how we behave are baked into algorithms. Her work in facial recognition (among other studies) showed that AI can have major biases and perpetuate inequities.

Helene Molinier, Senior Policy Advisor on Innovation at UN Women, also brought to light just how underrepresented women are in the field and how that can influence the design choices and decisions that are made. If we want to create more opportunities and transform our future world, we have to dismantle existing systems rather than let their principles inform AI.

Khalil Amiri, Vice Minister for Scientific Research from the Republic of Tunisia, also talked about the threat of creating bigger income and education gaps if we aren’t careful. Conversely, if AI is done well, there is a great opportunity for inclusivity. “If we train ourselves well, there will be many, many new jobs. AI education must be inclusive and open opportunities for everyone and could reduce inequalities. There will be a transformation of jobs, not elimination. We need good education otherwise you will not be able to access these new jobs.”

Collective Responsibility. Building on the first theme, it is our collective responsibility in the governance, design and implementation of AI to consider the possible ramifications of its use for bias, diversity and inclusivity; it is not someone else’s responsibility. The privacy, data and security considerations are countless, and it is our collective ethical, moral and societal responsibility not to ignore them. Fabrizio Hochschild Drummond of the Executive Office of the Secretary-General (EOSG) shared, “Change will never happen this slowly. We collectively cannot view this as competition. It is decentralized approaches from stakeholders and dissemination of principles that will help us make positive progress.”

Jim Hagemann Snabe, Chairman of the Board of Siemens, reminded us that we must keep key principles of trust, accountability and enhancement core to our AI work.

Growing Opportunities. There are endless ways in which we could harness the power of AI. Each speaker shared a unique use of AI that may or may not be readily apparent. Shlomy Kattan of XPrize described analyzing website usage to identify learners who may be at risk for suicide. Jean-Philippe Courtois of Microsoft (see AI education examples below), Yves Daccord of the Red Cross and many others shared how AI has been pivotal when applied to humanitarian causes, disaster prediction and relief, and global health crises.

Accelerate AI Education/Awareness and Learning Enhanced with AI. Perhaps most relevant to the Getting Smart audience is how AI matters in, and for, the field of education. I find that the need is twofold: learning and awareness about AI, and learning with AI. Tara Chklovski (@TaraChk) of Iridescent Learning (see recent podcast) led the AI in Education breakthrough track. It was evident, not only from her work but also from that of others in the room, that we are still on the cusp of learning about AI in many communities, but that there are growing areas where learning with AI is more mainstream.

Communities around the world are gaining increased exposure to how AI can help them solve their local issues and challenges. Schools are infusing AI tools, both adult and student facing, to enhance learning. Minecraft, one of many examples shared, will let learners test their AI creations and share them with the world.

My biggest takeaway is that AI will help us learn, but it won’t replace our need for strong, interpersonal teaching and relationships. AI can take on some of the time-consuming tasks, possibly grading, scheduling and skill work, so that educators have more time to work with learners and get to deeper learning. The freed mental space and capacity could lead to more time for creativity, relationship building and deep work with students. However, we also need to ask important ethical questions about implementation, potential overreliance on the technology and student privacy.

We need to accelerate the awareness and education about AI so families and learners have agency over their information and learning. We also need to embrace that AI can enhance learning and accelerate our work in schools if we are informed about the opportunities it can bring.

The 4th iteration of AI for Good will be held May 4th-9th of 2020 in Geneva. Be sure to check it out and follow our Future of Work campaign for more on AI in education.

For more, see:

- Ask About AI: The Future of Learning and Work

- Podcast: Using Artificial Intelligence to Solve Problems in Communities

- AI Family Challenge Introduces Artificial Intelligence, Encourages Problem Solving

This post is a part of the Getting Smart Future of Work Campaign. The future of work will bring new challenges and cause us to shift how we think about jobs and employability — so what does this mean for teaching and learning? In our exploration of the #FutureOfWork, sponsored by eduInnovation and powered by Getting Smart, we dive into what’s happening, what’s coming and how schools might prepare. For more, follow #futureofwork and visit our Future of Work page.
