Why You Need More Than “One Good Study” To Evaluate EdTech

Andrew Coulson

This post was originally published by MIND Research.

You probably wouldn’t be surprised to hear that every education technology (EdTech) publisher says its product works and has some sort of supporting evidence. But when that evidence is fully experimental, it is often very scarce: in many cases, it’s just one study. Yet educators often consider that single piece of “gold standard” evidence good enough to justify a purchasing decision.

But it shouldn’t be.

Educators aren’t the only ones stuck in this “one good study” paradigm. Highly credible EdTech evaluation lists give top marks for a single RCT (randomized controlled trial). Meanwhile, any program whose studies fall short of the RCT bar of rigor is downgraded by comparison, no matter how many other high-quality studies it has, under how many different conditions, over what timeframe, or how consistently positive the results are.

The Problem: Gold Standard EdTech Studies Are Rare

The biggest problem with relying solely on fully experimental RCTs to evaluate EdTech programs is that they are rare. To meet the requirements of a full experiment, these studies take years of planning and often years of analysis before publication. These delays cause a host of other challenges:

- The Product Has Changed: Because RCTs can take years to complete, the product version used in the study is often out of date by the time results appear. Programs are continually revised to meet new standards, to improve or add product components, and to change (and sometimes reduce) usage requirements and support. An out-of-date study captures none of these changes.

- All School Districts Are Not The Same: RCTs often use only one school district, because a full experimental rollout with student randomization is complex and requires extensive planning. But school districts differ in many ways, from technology, to culture, to the distribution of student subgroups. How do you know the study results apply to the specifics of your district?

- Different States, Different Assessments: A single study typically uses only one assessment (state test), so its results are specific to that assessment. But assessments still differ widely from state to state in level, type, and emphasis, so results may vary. Moreover, even within one state, the assessment can change from year to year. If the study used a different assessment, how do you gauge whether its results hold for your state’s current test?

- Limited Grade Levels: RCTs typically cover a specific grade band, not every grade a program serves. Yet teachers, usage, and content vary tremendously from K-2, to 3-5, to secondary. Extending results to unstudied grade bands is questionable because of differences in content, teaching methods, student ages, and assessments. How do you know the program works for all the grade levels you plan to adopt?

Altogether, these issues demonstrate the often limited relevance of relying on “one good study.”

Extrapolating one study’s results to your situation is not fully valid unless the study was performed in a district like yours, on the grade band you plan to use, with a student subgroup mix like yours, with usage like you plan to adopt, on your most recent assessment, and with the program revision you’ll be using.

The New Paradigm: Repeated Results

The time has come for a shift in how we evaluate EdTech programs. Rather than relying on one “gold standard” study, we should look at a large number of studies that use recent program versions, produce repeatable results, and span many varied districts. Quasi-experiments can study the adoption of a program as is, without the complexity and time that up-front experimental planning requires. Methods that match and compare similar schools with and without the program can be made statistically rigorous and powerful. And if we study results at the grade level, average test performance data is universally available on state websites, so a quasi-experimental study is possible for any large enough cohort of schools. If we pay attention to quasi-experiments instead of relying on RCTs alone, many more studies become possible.
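To make the matching idea concrete, here is a minimal Python sketch of one common approach: nearest-neighbour matching of schools on prior-year scores, then comparing year-over-year gains. It assumes only grade-level average scores for each school before and after adoption. The `School` class, the `matched_effect` function, and every school name and score are hypothetical, and this is not a description of MIND’s actual methodology; a real study would match on more than one baseline variable (demographics, enrollment, subgroup mix) and report uncertainty, not just a point estimate.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class School:
    name: str
    baseline: float     # hypothetical grade-level average score, year before adoption
    outcome: float      # hypothetical grade-level average score, year after adoption
    uses_program: bool


def matched_effect(schools: list[School]) -> float:
    """Nearest-neighbour matching on baseline score, with replacement.

    Returns the average difference in year-over-year gain between each
    program school and the comparison school with the closest baseline.
    """
    treated = [s for s in schools if s.uses_program]
    controls = [s for s in schools if not s.uses_program]
    if not treated or not controls:
        raise ValueError("need both program and comparison schools")

    diffs = []
    for t in treated:
        # Pick the comparison school whose prior-year score is closest.
        c = min(controls, key=lambda s: abs(s.baseline - t.baseline))
        diffs.append((t.outcome - t.baseline) - (c.outcome - c.baseline))
    return mean(diffs)


# Invented example data, for illustration only.
schools = [
    School("A", 50.0, 56.0, True),
    School("B", 62.0, 66.0, True),
    School("C", 49.0, 51.0, False),
    School("D", 61.0, 63.0, False),
    School("E", 75.0, 76.0, False),
]

print(f"Average matched gain difference: {matched_effect(schools):.1f} points")
```

With these invented numbers, school A’s 6-point gain is compared with C’s 2-point gain, and B’s 4-point gain with D’s 2-point gain, for an average matched difference of 3 points. Matching with replacement keeps the sketch short, but it lets one comparison school stand in for several program schools.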

Crucially, a larger number of studies enables buyers to evaluate repeatability. Why is repeatability so important? Because even “gold standard” results from single studies in the social sciences have very often failed to replicate. In fact, the conventional significance threshold (p < 0.05) accepts a 5% chance of a false positive: even when a program has no real effect, roughly 1 in 20 studies will still appear to show one. How does one know that a single “gold standard” study was not itself a cherry-picked or fluke result? So “one good study” is not enough evidence. Reliable results, demonstrated through replication across many studies, need to be the new normal.
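The arithmetic behind the repeatability argument fits in a few lines of Python. This is a simplified model: it assumes each study is independent and treats the threshold `ALPHA = 0.05` as the per-study chance of a false positive when the program has no real effect (for a two-sided test, the chance of a spurious *positive* result is actually half that). The function names are illustrative only.

```python
# Simplified model: independent studies of a program with NO real effect,
# each declared "significant" with probability ALPHA by chance alone.
ALPHA = 0.05


def prob_all_fluke(k: int, alpha: float = ALPHA) -> float:
    """Chance that k independent null studies are ALL significant."""
    return alpha ** k


def prob_any_fluke(n: int, alpha: float = ALPHA) -> float:
    """Chance that AT LEAST ONE of n independent null studies is significant,
    e.g. if many studies were run and only the best one was reported."""
    return 1 - (1 - alpha) ** n


for k in (1, 2, 3, 5):
    print(f"{k} independent studies all flukes: {prob_all_fluke(k):.3g}")
print(f"At least one fluke among 10 studies: {prob_any_fluke(10):.1%}")
```

One fluke study is a 1-in-20 event, while three independent flukes in a row occur with probability 0.05³ = 0.000125. Conversely, if ten null studies were run and only the best one reported, the chance that at least one looks significant is about 40%, which is why a single, possibly cherry-picked result says little on its own.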

Imagine this new paradigm with a large number of recent studies, say five or more. This lets us look for consistent patterns over multiple years, across grade levels, and especially across different types of districts and assessments. This paradigm demonstrates its rigor through repeatability and offers vastly improved validity with respect to:

- Recent version of the program, training and support;

- A real-world variety of types of use, districts, grade-levels, teachers, and student subgroups;

- Patterns of results across many different assessments.
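A crude way to look for these consistent patterns is to tally, for each year, grade band, or assessment, how many studies found a positive effect. The Python sketch below assumes each study is summarized by a single effect estimate; the `Study` records and the `consistency_by` helper are invented for illustration, and counting only the sign of each estimate is a deliberate simplification (a fuller review would also weigh each study’s size and precision).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Study:
    year: int
    grade_band: str   # e.g. "3-5"
    assessment: str   # hypothetical state-test label
    effect: float     # estimated effect (program minus comparison), one consistent unit


def consistency_by(studies: list[Study], attr: str) -> dict[str, tuple[int, int]]:
    """For each value of `attr`, return (number of positive results, number of studies)."""
    tally = defaultdict(lambda: [0, 0])
    for s in studies:
        key = str(getattr(s, attr))
        tally[key][1] += 1          # count every study in this group
        if s.effect > 0:
            tally[key][0] += 1      # count studies with a positive estimate
    return {k: (pos, n) for k, (pos, n) in tally.items()}


# Invented study records, for illustration only.
studies = [
    Study(2016, "3-5", "State A test", 0.12),
    Study(2017, "3-5", "State B test", 0.08),
    Study(2017, "6-8", "State A test", -0.02),
    Study(2018, "3-5", "State C test", 0.10),
    Study(2018, "6-8", "State B test", 0.05),
]

for attr in ("year", "grade_band", "assessment"):
    print(attr)
    for value, (pos, n) in sorted(consistency_by(studies, attr).items()):
        print(f"  {value}: {pos}/{n} positive")
```

In this made-up set, every grades 3-5 study is positive while the grades 6-8 evidence is mixed: the kind of pattern a single study could never reveal.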

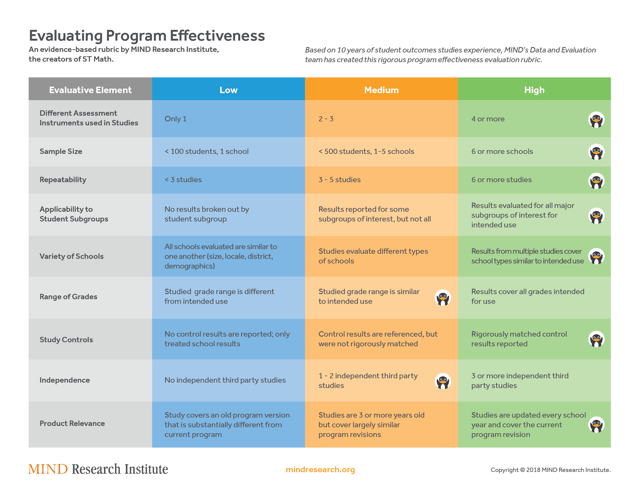

At MIND, we believe a high volume of effectiveness studies is the future of a healthy market of product information in education. To illustrate and promote this new paradigm, we’ve created a program evaluation rubric.

Download the Program Evaluation Rubric (with ST Math notation)

Download the Program Evaluation Rubric (blank)

While MIND has not yet achieved the highest standard in each of these rubric sections, we are driving toward that goal, as well as toward annual, transparent evaluations of results for all school cohorts. We’ve already been able to do just that in grades 3, 4, and 5. We want our program to be held accountable for scalable, repeatable, robust results; it’s how the program will improve and student results will grow.

Effective learning is important enough that there should be studies published every year covering every customer. We believe MIND is ahead of the curve on study volume, but we are not alone. For example, districts are starting to share the results of their own studies with peers on LearnPlatform, which will eventually create a pool of many studies covering a variety of conditions for any program. With more relevant information to inform their purchasing decisions, educators can find the best product fit for their students.

For more, see:

- Designing Pilot Programs for Schools and Districts

- Pilots Offer a Promising Path to Competency-Based Education

- Getting Smart Podcast | Using Pilot Programs as a Foundation for Innovation

Andrew Coulson is Chief Data Science Officer at MIND Research. Connect with him on Twitter: @AndrewRCoulson
