It’s Time for The Next Big Advance: Comparable Growth Measures

The most important next step in the shift to personal digital learning is developing comparable growth measures for individual students.

With the explosion of adaptive assessment and instruction and the widespread use of digital curriculum with embedded assessments, there is a growing need to be able to compare measures of student progress.

Scarcity. States began introducing achievement tests in the 1990s (as I discussed in a case for Common Standards and Better Tests). We didn’t have much performance data about students, so we started using the year-end summative assessments for four different jobs:

- improving teaching and learning,
- managing student matriculation,
- evaluating teachers, and
- ensuring school quality.

The problem is that these year-end criterion-referenced tests were designed to determine if students were on grade level. For students above or below grade level, they are less useful in measuring academic growth over time.

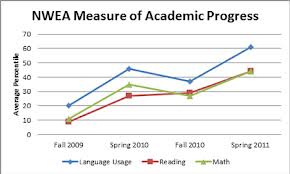

Abundance. With the shift to digital learning, we’re seeing an explosion of content-embedded and adaptive assessment. These new tools provide a rapidly improving ability to draw inferences about a student's growth trajectory and compare his/her path with that of another student in a different learning system. Adaptive assessments like NWEA, i-Ready, and Scantron provide an accurate measure of growth.

These new assessments are becoming the first source of data for improving teaching and learning. With the growth in competency-based environments, new measures help students demonstrate proficiency. However, none of this data is currently comparable. NWEA will give you a different measure than Scantron, and neither will match the state growth estimate.

To begin to use a variety of assessments to manage student progress in a competency-based system, we need good-enough comparability across different systems and correlation with summative growth measures. For example, growth of 1.2 years in a Dreambox school should equal growth of 1.2 years in an i-Ready school, and the Smarter Balanced test results should yield something close to 1.2 years of growth.
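One simple way to think about "good-enough comparability" is linear linking: rescale scores from one assessment so that their mean and spread match another assessment taken by a comparable group of students. The sketch below uses mean-sigma linking, which is a generic technique and not the method of any vendor or consortium named here. The function names and all of the scores are hypothetical and for illustration only.

```python
from statistics import mean, pstdev


def fit_linear_link(scores_a, scores_b):
    """Return (slope, intercept) that maps scale A onto scale B.

    Mean-sigma linking: match the mean and standard deviation of the two
    score distributions. Assumes both lists come from comparable groups.
    """
    slope = pstdev(scores_b) / pstdev(scores_a)
    intercept = mean(scores_b) - slope * mean(scores_a)
    return slope, intercept


def to_common_scale(score_a, slope, intercept):
    """Convert a single scale-A score to scale-B units."""
    return slope * score_a + intercept


# Hypothetical scores for the same cohort on two assessments.
scores_a = [180.0, 190.0, 200.0, 210.0, 220.0]
scores_b = [400.0, 450.0, 500.0, 550.0, 600.0]

slope, intercept = fit_linear_link(scores_a, scores_b)

# One student's fall and spring scores on assessment A (hypothetical).
fall, spring = 195.0, 207.0
growth_on_b = (to_common_scale(spring, slope, intercept)
               - to_common_scale(fall, slope, intercept))
print(f"Growth expressed in scale-B units: {growth_on_b:.1f}")
```

A real linking study would need a shared sample of students or common items, and would report the error introduced by the conversion.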

Multiple sources of achievement data (content-embedded assessment and teacher observation) should be automagically collected in super gradebooks and should produce composite growth measures. Achievement levels and growth measures should be presented to students, teachers, and parents in data visualization tools that facilitate regular discussions about academic progress.
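As a rough illustration of what a composite growth measure could look like, the sketch below combines several growth estimates with inverse-variance weighting, so that more precise sources count for more. It assumes the estimates are already in the same units (years of growth) and that their errors are independent, which may not hold in practice. The function name and the numbers are hypothetical.

```python
from math import sqrt


def composite_growth(estimates):
    """Combine (growth, standard_error) pairs by inverse-variance weighting.

    Returns the weighted growth estimate and its standard error.
    Assumes all estimates share one scale and have independent errors.
    """
    weights = [1.0 / (se ** 2) for _, se in estimates]
    total = sum(weights)
    combined = sum(w * g for w, (g, _) in zip(weights, estimates)) / total
    combined_se = sqrt(1.0 / total)
    return combined, combined_se


# Hypothetical growth estimates, in years, from three sources.
sources = [
    (1.2, 0.2),  # adaptive assessment
    (1.0, 0.4),  # content-embedded assessment
    (1.4, 0.4),  # teacher observation
]

growth, se = composite_growth(sources)
print(f"Composite growth: {growth:.2f} years (SE {se:.2f})")
```

The composite has a smaller standard error than any single source, which is the main argument for pooling data in one gradebook.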

Next steps. The components of a growth model probably include:

- the scale upon which changes in student learning may be measured (e.g., Lexile/Quantile);
- the model used to describe growth on the scale (e.g., in scale score changes, percentiles, grade level equivalents);
- the model to measure the comparability of various forms of assessment;
- the model used to determine if the growth is meaningful and significant; and
- the instructional and accountability inferences made based on the observed growth.
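To make the fourth component concrete, one common check is to compare a student's gain with the measurement error of the two scores behind it. The sketch below is a minimal version of that idea, assuming each score comes with a standard error of measurement and that the two errors are independent. The function name, the 1.96 threshold, and the scores are illustrative assumptions, not part of any state or consortium model.

```python
from math import sqrt


def evaluate_gain(pre, post, se_pre, se_post, z_threshold=1.96):
    """Return (gain, se_gain, z, is_significant) for a pre/post score pair.

    The standard error of the gain combines both measurement errors,
    assuming they are independent.
    """
    gain = post - pre
    se_gain = sqrt(se_pre ** 2 + se_post ** 2)
    z = gain / se_gain
    return gain, se_gain, z, z > z_threshold


# Hypothetical scale scores with standard errors of 3 and 4 points.
large = evaluate_gain(pre=200.0, post=212.0, se_pre=3.0, se_post=4.0)
small = evaluate_gain(pre=200.0, post=206.0, se_pre=3.0, se_post=4.0)

for label, (gain, se_gain, z, significant) in [("large", large), ("small", small)]:
    print(f"{label}: gain={gain:.0f}, SE={se_gain:.0f}, z={z:.1f}, significant={significant}")
```

Whether a statistically detectable gain is also educationally meaningful is a separate judgment that depends on the scale and on expected growth for that grade.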

The state consortia will develop the scale and some way to describe movement on the scale. The last couple of items are wrapped up in state politics and policies, ESEA waivers, RTT plans, and local preferences.

“I think the next important step for the nation will be when SBAC establishes its proficiency standards in Summer of 2014,” said Tony Alpert of Smarter Balanced. “At that point, the vertical articulation of the expectation for proficiency across the grades, the associated achievement level descriptors and the exemplar items that are associated with the cut-scores at the proficient levels will serve as early indications of the nation’s expectation of growth over the grade spans.”

Going online. With the growth of full- and part-time online learning, the need to compare providers is quickly expanding. Comparable growth measures are key to accurately measuring each provider's value-added contribution.

We’re writing a paper about online learning and will discuss the importance of better growth measures. Let me know if you have thoughts on next steps on growth measures.


Tom Vander Ark

If it's not clear, here are the three problems I think we need to solve:

Problem 1: provider assessments don't match state estimates of growth

-Suggests need for 1) better state assessments and 2) correlation between formative & summative

Problem 2: schools are increasingly using multiple instruction/assessment products with no way to combine them into a unitary reporting system to 1) improve instruction and 2) manage student progress.

-Suggests common index (like Lexile) to which other assessments can be correlated so that everyone can produce growth measures in the same units on the same scale (but, perhaps with different confidence intervals)

Problem 3: Huge summative exams take days to administer.

-When every student is learning in a system that produces 10,000 data points/day, we can shift to lightweight summative sampling strategies like NAEP.

Some have suggested item banks and item sharing like MasteryConnect, which is great, but it keeps formative assessment separate from instruction. I'm most interested in encouraging use of assessment embedded in instruction. Take New Classrooms, for example: they give an external assessment at the end of the period rather than using the assessment data embedded in the tutoring/games/activities because they don't have a simple way to use the data.

Having the consortia build a Lexile-like frame is probably best, but it will take another 3 years...